Describe and monitor storage performance and methods of optimization

Jumbo Frames

Due to the additional network configuration required, Nutanix does not recommend configuring jumbo frames on AHV for most deployments. However, some scenarios such as database workloads using Nutanix Acropolis Block Services (ABS) with iSCSI network connections can benefit from the larger frame sizes and reduced number of frames. To enable jumbo frames, refer to the AHV Networking best practices guide. If you choose to use jumbo frames, be sure to enable them end-to-end in the desired network and consider both the physical and virtual network infrastructure impacted by the change.
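
The "reduced number of frames" benefit can be estimated with simple arithmetic. The sketch below is illustrative only: it assumes 40 bytes of IPv4 and TCP headers per packet with no options, and it ignores iSCSI and Ethernet overhead. The function name and constant are hypothetical and do not come from any Nutanix tool.

```python
import math

# Assumption: 20-byte IPv4 header + 20-byte TCP header, no options.
IP_TCP_HEADER_BYTES = 40


def frames_needed(payload_bytes: int, mtu: int) -> int:
    """Estimate how many frames are needed to carry payload_bytes at a given MTU."""
    max_segment = mtu - IP_TCP_HEADER_BYTES
    if max_segment <= 0:
        raise ValueError("MTU is too small to carry any payload")
    return math.ceil(payload_bytes / max_segment)


if __name__ == "__main__":
    one_mib = 1024 * 1024
    print("MTU 1500:", frames_needed(one_mib, 1500))  # standard frames
    print("MTU 9000:", frames_needed(one_mib, 9000))  # jumbo frames
```

Under these assumptions, 1 MiB of payload needs roughly six times fewer frames at an MTU of 9000 than at 1500, which is where the reduced per-frame processing comes from.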

Guest VM Performance – Basics

- In hybrid clusters, AOS dynamically keeps “hot” data on SSD and “cold” data on HDD.

- Every VM has a “working set” of data that it is actively manipulating.

- Key Point: To maximize performance, all VM working sets must fit within the SSD tier.
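
A rough way to reason about the key point is to add up the estimated working sets and compare the total with the usable SSD tier capacity. The following is a minimal sketch; the VM names, the sizes, and the function itself are hypothetical, and real working-set sizes would have to be measured or estimated separately.

```python
def working_sets_fit(working_sets_gib: dict, ssd_tier_gib: float):
    """Return (fits, headroom_gib) for the combined VM working sets.

    working_sets_gib maps a VM name to its estimated working set in GiB.
    ssd_tier_gib is the usable SSD tier capacity in GiB (assumed known).
    """
    total = sum(working_sets_gib.values())
    return total <= ssd_tier_gib, ssd_tier_gib - total


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    vms = {"sql01": 300, "vdi-pool": 450, "web01": 50}
    fits, headroom = working_sets_fit(vms, ssd_tier_gib=1000)
    print("fits in SSD tier:", fits, "headroom (GiB):", headroom)
```

A negative headroom value indicates that some active data is likely to be served from HDD.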

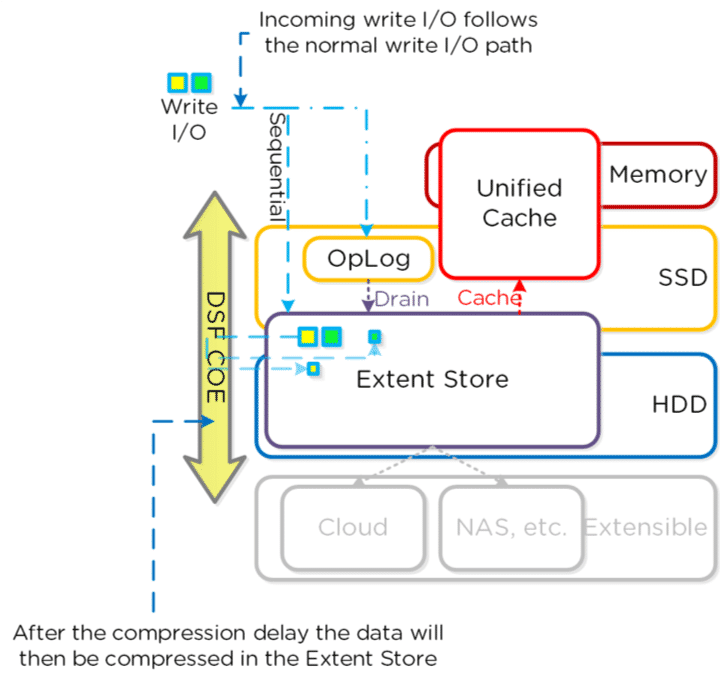

Storage Performance – Inline Compression – Nutanix Capacity Optimization Engine (COE)

- Writes are treated as sequential when there is more than 1.5 MB of outstanding write I/O to a vDisk, or when an individual operation is larger than 64 KB.

- The default oplog size is 6 GB per vDisk.

- Compressing oplog writes lets more data fit in each vDisk's oplog space.

- When reading compressed data:

  - The compressed data is first decompressed in memory, then served to the hypervisor.

  - Frequently accessed data is decompressed in the extent store and then moves to the cache.

- Sequential Writes

  - Compressed during the write to the Extent Store.

- Random Writes

  - Any Oplog write operation larger than 4 KB is compressed.

- Oplog Draining

  - Data is decompressed, then aligned and recompressed at a 32 KB unit size.

- RF (replication factor) Data

  - Compressed before transmission to other CVMs.

- Key Points:

  - Sequential write operations do NOT bypass SSD. They bypass the Oplog.

  - Post-process compression improves space efficiency only; it provides no performance gain.
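
The write-path rules listed above can be summarized as a small decision function. This is a sketch of the thresholds as stated in these notes, not of the actual AOS implementation: the function and constant names are hypothetical, binary units (1 KB = 1024 bytes) are assumed, and the comparisons are strict because the notes say "more than" and "greater than".

```python
# Thresholds taken from the notes above; binary units are an assumption.
SEQ_OUTSTANDING_BYTES = int(1.5 * 1024 * 1024)  # more than 1.5 MB outstanding to the vDisk
SEQ_OP_BYTES = 64 * 1024                        # single operation larger than 64 KB
OPLOG_COMPRESS_MIN_BYTES = 4 * 1024             # oplog writes larger than 4 KB are compressed


def classify_write(op_bytes: int, outstanding_bytes: int):
    """Return (destination, compressed_inline) for one write operation."""
    sequential = outstanding_bytes > SEQ_OUTSTANDING_BYTES or op_bytes > SEQ_OP_BYTES
    if sequential:
        # Sequential writes bypass the oplog (not the SSD tier) and are
        # compressed during the write to the extent store.
        return "extent store", True
    # Random writes land in the oplog; only larger operations are compressed.
    return "oplog", op_bytes > OPLOG_COMPRESS_MIN_BYTES


if __name__ == "__main__":
    print(classify_write(op_bytes=128 * 1024, outstanding_bytes=0))
    print(classify_write(op_bytes=8 * 1024, outstanding_bytes=0))
    print(classify_write(op_bytes=4 * 1024, outstanding_bytes=0))
```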

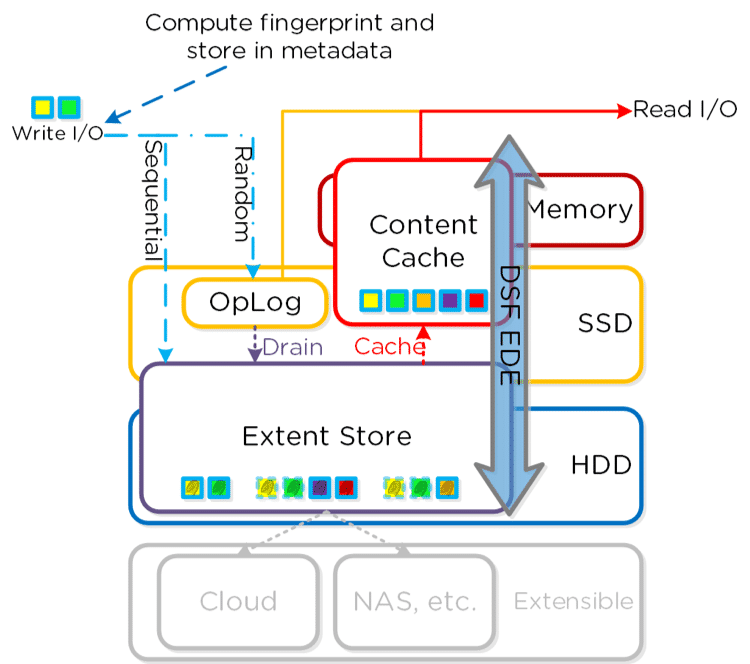

Storage Performance – Cache Deduplication – Nutanix Elastic Deduplication Engine

- No background scans required to re-read data to generate fingerprints

- Fingerprints are stored as part of the written block’s metadata

- Reference counts are tracked for each fingerprint – fingerprints with low reference counts are discarded

- Unified cache spans both CVM memory and a portion of SSD
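
The idea of fingerprinting on write and tracking reference counts can be sketched as follows. This is a toy model, not the Elastic Deduplication Engine itself: the `FingerprintIndex` class, the use of SHA-1 via `hashlib`, and the `min_refs` threshold are assumptions chosen for illustration.

```python
import hashlib
from collections import Counter


class FingerprintIndex:
    """Toy index that fingerprints blocks on ingest and counts references."""

    def __init__(self, min_refs: int = 2):
        self.refs = Counter()     # fingerprint -> reference count
        self.min_refs = min_refs  # assumed threshold for keeping a fingerprint

    def ingest(self, block: bytes) -> str:
        """Fingerprint a block as it is written; no later re-read is needed."""
        fingerprint = hashlib.sha1(block).hexdigest()
        self.refs[fingerprint] += 1
        return fingerprint

    def prune(self) -> int:
        """Discard fingerprints with low reference counts; return how many were dropped."""
        low = [fp for fp, count in self.refs.items() if count < self.min_refs]
        for fp in low:
            del self.refs[fp]
        return len(low)


if __name__ == "__main__":
    index = FingerprintIndex()
    index.ingest(b"A" * 16)
    index.ingest(b"A" * 16)  # duplicate block, same fingerprint
    index.ingest(b"B" * 16)  # unique block
    print("pruned:", index.prune(), "remaining:", len(index.refs))
```

Because the fingerprint is computed while the block is being written, the index is populated without a background scan, which matches the first bullet above.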

Verifying Storage Efficiency – Prism

- Prism displays multiple data-reduction metrics on the Storage pane:

- Data Reduction Ratio and Savings – What the cluster is saving with configured data-efficiency methods

- Overall Efficiency – The approximate efficiency achievable with compression, deduplication, and erasure coding (EC) enabled
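
As a worked example of how a ratio and a savings figure relate, the sketch below assumes the common definition of a data reduction ratio (logical size before reduction divided by physical size after reduction). Prism's exact calculation is not given in these notes, so treat the formula and the function name as assumptions.

```python
def data_reduction(logical_bytes: float, physical_bytes: float):
    """Return (ratio, savings_bytes) under the assumed definition.

    logical_bytes: size of the data before compression/dedup/EC.
    physical_bytes: space actually consumed after reduction.
    """
    if physical_bytes <= 0:
        raise ValueError("physical_bytes must be positive")
    ratio = logical_bytes / physical_bytes
    savings = logical_bytes - physical_bytes
    return ratio, savings


if __name__ == "__main__":
    # Hypothetical figures: 30 TiB of logical data stored in 10 TiB.
    ratio, savings = data_reduction(30.0, 10.0)
    print(f"ratio {ratio:.2f}:1, savings {savings:.1f} TiB")
```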

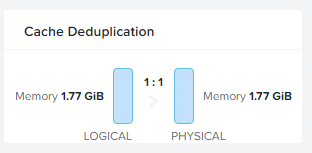

Cache Deduplication

- Shows cache (DRAM) and SSD savings due to deduplication

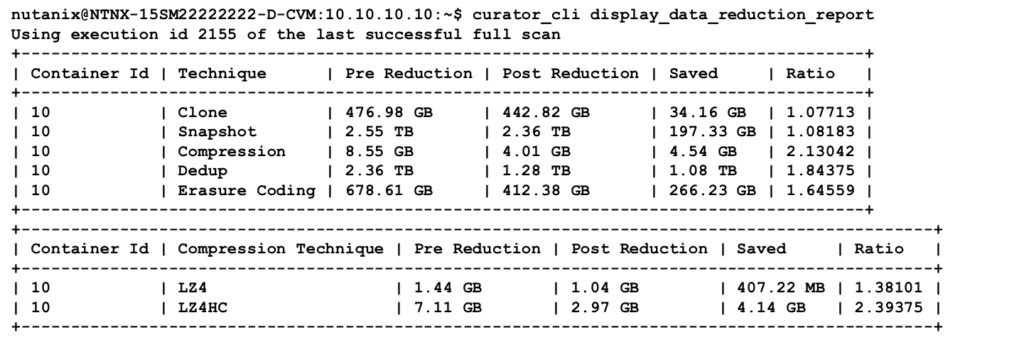

Verifying Storage Efficiency

curator_cli display_data_reduction_report

- The curator_cli command on the CVM provides per-technique data reduction statistics.

- This data is rolled up into the Capacity Optimization statistics in Prism.

- Data is compressed on ingest using the LZ4 algorithm.

- As data ages, it is recompressed using the LZ4HC algorithm to provide an improved compression ratio. Data is considered aged when:

  - Regular data: no read/write access for 3 days.

  - Snapshot data: no access for 1 day.
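
The aging thresholds above can be expressed as a small lookup. This is a sketch of the rule as stated in these notes, not of how Curator schedules recompression; the function name, the `kind` labels, and the use of "at least" for the comparison are assumptions.

```python
from datetime import datetime, timedelta

# Idle thresholds from the notes above.
RECOMPRESS_AFTER = {
    "regular": timedelta(days=3),   # no read/write access for 3 days
    "snapshot": timedelta(days=1),  # no access for 1 day
}


def expected_algorithm(kind: str, last_access: datetime, now: datetime) -> str:
    """Return which algorithm the data would be expected to use at time `now`."""
    idle = now - last_access
    return "LZ4HC" if idle >= RECOMPRESS_AFTER[kind] else "LZ4"


if __name__ == "__main__":
    now = datetime(2024, 1, 10, 12, 0)
    two_days_ago = now - timedelta(days=2)
    print(expected_algorithm("regular", two_days_ago, now))   # still within 3 days
    print(expected_algorithm("snapshot", two_days_ago, now))  # past the 1-day threshold
```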