Differentiate between physical and logical cluster components

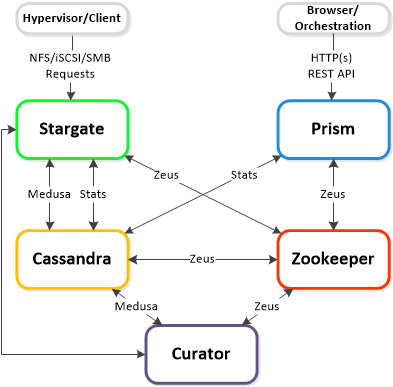

Cassandra

- Distributed metadata store, based on heavily modified Apache Cassandra

- Stores/manages all metadata in a ring-like manner

- Metadata describes where and how data is stored on a filesystem

- Lets the system know on which node/disk, and in what form, the data resides

- Runs on every node in the cluster

- Accessed via Medusa

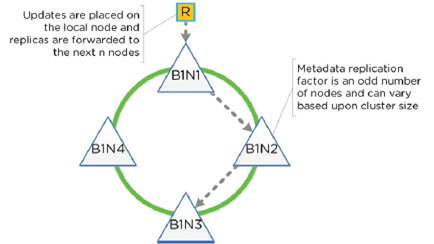

- RF used to maintain availability/redundancy

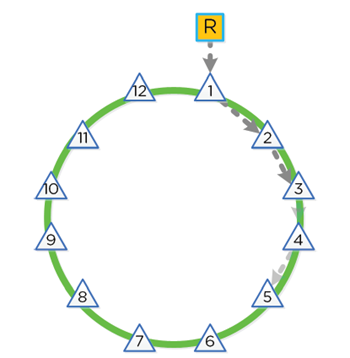

- On a write/update, the row is written to a node in the ring and then replicated to n peers (n depends on cluster size)

- Majority must agree before commit

- Each node is responsible for a subset of overall platform metadata

- Eliminates traditional bottlenecks (served from all nodes vs dual controllers)
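The "majority must agree" rule above is plain quorum arithmetic. A minimal sketch, assuming a strict majority of the metadata replicas is required; the function names are hypothetical and not part of any Nutanix or Cassandra API:

```python
def quorum_size(replication_factor: int) -> int:
    """Smallest number of replicas that forms a strict majority."""
    return replication_factor // 2 + 1


def can_commit(acks: int, replication_factor: int) -> bool:
    """True once a majority of replicas has acknowledged the write."""
    return acks >= quorum_size(replication_factor)


# Metadata replication factors mentioned in these notes: RF3 and RF5.
for rf in (3, 5):
    print(f"RF{rf}: quorum = {quorum_size(rf)}")
```

With RF3, two acknowledgements are enough to commit; with RF5, three are needed.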

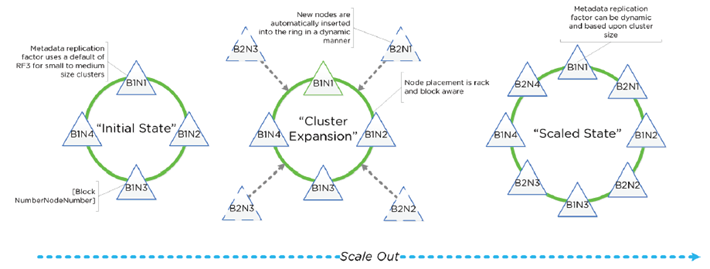

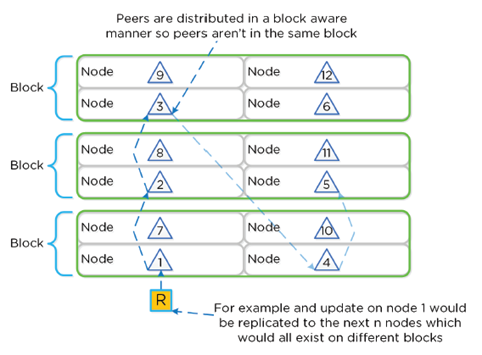

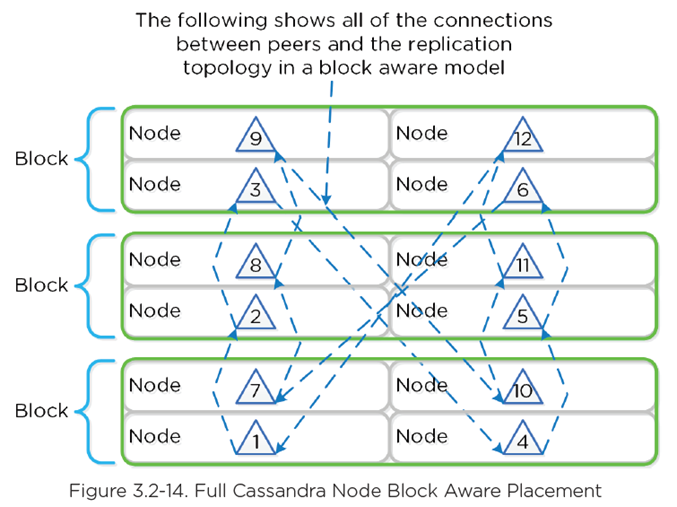

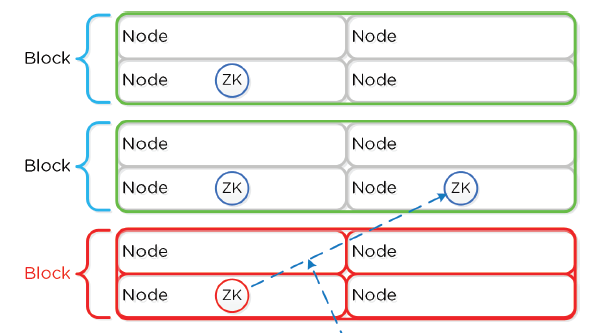

- When the cluster scales, new nodes are inserted into the ring between existing nodes for “block awareness” and reliability

- Peer replication iterates through nodes in a clockwise manner

- With block awareness, peers are distributed among blocks to ensure no two peers are in the same block

- If a block failure occurs, at least two copies of the data remain available
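The clockwise, block-aware peer selection described above can be sketched as follows. This is an illustration only: the node and block names are made up, and skipping same-block nodes during the walk is an assumption used to show the idea, not a description of how the platform implements it internally.

```python
from typing import List, Tuple

Node = Tuple[str, str]  # (node name, block name); hypothetical labels


def pick_replicas(ring: List[Node], start: int, rf: int) -> List[Node]:
    """Walk the ring clockwise from `start`, choosing `rf` nodes in distinct blocks."""
    chosen: List[Node] = []
    used_blocks = set()
    for step in range(len(ring)):
        node = ring[(start + step) % len(ring)]
        if node[1] not in used_blocks:
            chosen.append(node)
            used_blocks.add(node[1])
        if len(chosen) == rf:
            return chosen
    raise ValueError("not enough distinct blocks to satisfy the replication factor")


# Ring where nodes from different blocks are interleaved.
interleaved = [
    ("node-A1", "block-A"), ("node-B1", "block-B"), ("node-C1", "block-C"),
    ("node-A2", "block-A"), ("node-B2", "block-B"), ("node-C2", "block-C"),
]
# Ring where nodes from the same block sit next to each other.
grouped = [
    ("node-A1", "block-A"), ("node-A2", "block-A"), ("node-B1", "block-B"),
    ("node-B2", "block-B"), ("node-C1", "block-C"), ("node-C2", "block-C"),
]

replicas = pick_replicas(interleaved, start=0, rf=3)
grouped_replicas = pick_replicas(grouped, start=0, rf=3)

# Simulate losing block-A: the copies in the other blocks survive.
survivors = [node for node in replicas if node[1] != "block-A"]
print(replicas, survivors)
```

In both ring layouts the three replicas land in three different blocks, so losing any single block still leaves two copies.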

Metadata Awareness Conditions

Fault Tolerance Level 1 (FT1):

Data is Replication Factor 2 (RF2) / Metadata is Replication Factor 3 (RF3)

Fault Tolerance Level 2 (FT2):

Data is Replication Factor 3 (RF3) / Metadata is Replication Factor 5 (RF5)
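The two levels above pair a data RF with a larger metadata RF. A small sketch of the arithmetic, assuming data stays available while one copy survives and metadata needs a strict majority of its replicas; the class and method names are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaultToleranceLevel:
    name: str
    data_rf: int       # copies of each piece of data
    metadata_rf: int   # copies of each metadata row

    def tolerated_failures(self) -> int:
        # Data stays readable while at least one copy survives.
        data_ok = self.data_rf - 1
        # Metadata needs a strict majority of its replicas to stay reachable.
        metadata_ok = (self.metadata_rf - 1) // 2
        return min(data_ok, metadata_ok)


FT1 = FaultToleranceLevel("FT1", data_rf=2, metadata_rf=3)
FT2 = FaultToleranceLevel("FT2", data_rf=3, metadata_rf=5)

for level in (FT1, FT2):
    print(level.name, "tolerates", level.tolerated_failures(), "simultaneous failure(s)")
```

Under these assumptions the metadata RF is the smallest odd replica count whose majority survives the same number of failures as the data RF, which is why RF2 data pairs with RF3 metadata and RF3 data with RF5 metadata.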

Zookeeper

- Cluster configuration manager, based on Apache Zookeeper

- Stores cluster configuration, including hosts/IPs/state/etc.

- Runs on 3 nodes in the cluster; 1 leader

- Accessed via Zeus

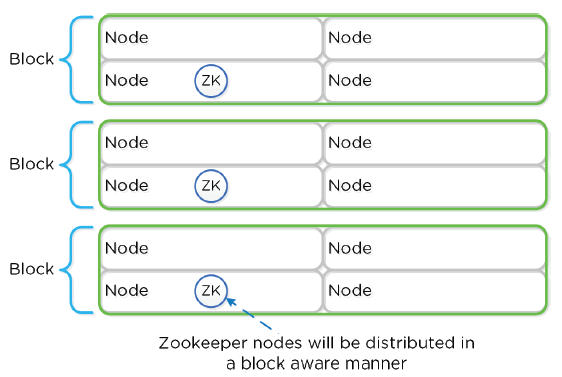

- Distributed in block-aware manner

- Ensures availability in event of block failure

- In the event of a Zookeeper outage, the ZK role is transferred to another node

Stargate

- Data I/O Manager

- Responsible for all data management and I/O

- Main interface to hypervisors (via iSCSI, NFS, SMB)

- Runs on every node in the cluster

Curator

- MapReduce cluster management/cleanup

- Manages/distributes tasks throughout the cluster

- Disk balancing/proactive scrubbing

- Runs on every node in the cluster; 1 leader

Prism

- UI/API

- Runs on every node in the cluster; 1 leader

Genesis

- Cluster Component/Service Manager

- Runs on each node, responsible for service interactions (start/stop/etc)

- Runs independently of the cluster (does not require the cluster to be configured or running)

- Requires Zookeeper

Chronos

- Job/task Scheduler

- Takes jobs/tasks from a Curator scan and schedules/throttles them among nodes

- Runs on every node in the cluster; 1 leader

Cerebro

- Replication/DR Manager

- Schedules snapshots and replication to remote sites; handles site migrations and failovers

- Runs on every node in the cluster; 1 leader

- All nodes participate in replication

Pithos

- vDisk Configuration Manager

- Stores vDisk (DSF) configuration data

- Runs on every node in the cluster

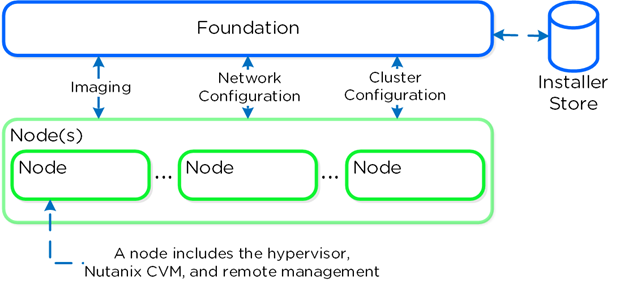

Foundation

- Bootstrap, imaging, and deployment tool for Nutanix clusters

- The imaging process installs the desired version of the AOS software as well as the hypervisor of choice

- AHV is pre-installed by default

- Foundation must be used to leverage a different hypervisor

- NOTE: Some OEMs will ship directly from the factory with the desired hypervisor

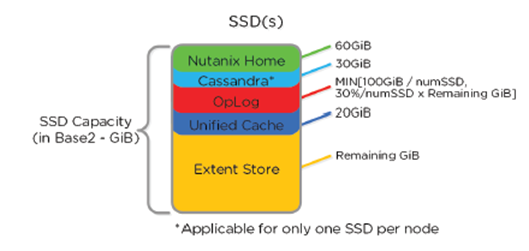

Disk Partitioning

SSD Devices

- Nutanix Home (CVM core)

- Cassandra (metadata storage)

- OpLog (persistent write buffer)

- Unified Cache (SSD cache portion)

- Extent Store (persistent storage)

- Home is mirrored across the first two SSDs

- Cassandra is on the first SSD

- If the first SSD fails, the CVM is restarted and Cassandra storage moves to the second SSD

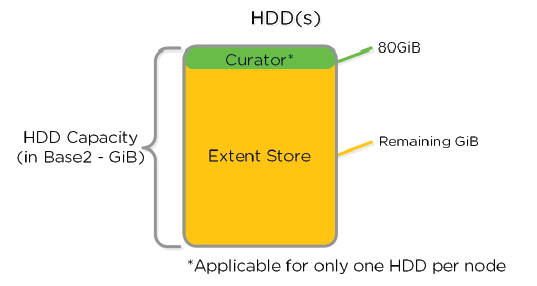

HDD Devices

- Curator Reservation (Curator Storage)

- Extent Store (Persistent Storage)