Getting Started with LXC

I am in the process of spinning up a new home lab, and I have a need for several Linux-based applications. Since I do not have a plethora of resources (and since I want to try something new), I plan to use containers. What better way to get started than with LXC!

What is LXC? LXC (short for Linux Containers) is an operating-system-level virtualization method for running multiple isolated Linux containers that share a single Linux kernel. There is a good read here that explains the features and components of LXC.

To provide connectivity to my containers, I first need to create a network bridge. There are two main types of bridges that can be used for connectivity: a Host Bridge and an Independent Bridge (aka masqueraded bridge). I won’t go into detail about the differences between the two. For the purposes of my lab I’ll be using a Host Bridge, which allows my containers to connect directly to the host network.

There are plenty of great articles on creating Linux bridges. For mine, I made a copy of my current ifcfg-eth0 script with some minor edits:

    cat /etc/sysconfig/network-scripts/ifcfg-eth0
    TYPE="Ethernet"
    NAME="eth0"
    DEVICE="eth0"
    ONBOOT="yes"
    BRIDGE="br0"

Note: The BRIDGE="br0" parameter attaches my physical eth0 interface to the br0 bridge.

    cat /etc/sysconfig/network-scripts/ifcfg-br0
    DEVICE="br0"
    ONBOOT="yes"
    TYPE="Bridge"
    BOOTPROTO="static"
    IPADDR="192.168.133.129"
    PREFIX="24"
    GATEWAY="192.168.133.1"
    DNS1="192.168.133.1"
    STP="on"
    DELAY="0"
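Since a mismatch between the two files can take the host off the network on restart, it may be worth confirming that they agree before going further. Here is a minimal sketch of such a check. The helper names ifcfg_get and bridge_pair_ok are my own (hypothetical), and the sketch assumes simple KEY="value" lines like the ones above.

```shell
#!/bin/sh
# Hypothetical helpers; they assume plain KEY="value" or KEY=value lines.

# ifcfg_get FILE KEY: print the value of KEY from an ifcfg-style file.
ifcfg_get() {
    awk -F= -v k="$2" '$1 == k { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# bridge_pair_ok IFACE_FILE BRIDGE_FILE: succeed only if the interface's
# BRIDGE value is set and equals the bridge file's DEVICE value.
bridge_pair_ok() {
    want=$(ifcfg_get "$1" BRIDGE)
    have=$(ifcfg_get "$2" DEVICE)
    [ -n "$want" ] && [ "$want" = "$have" ]
}
```

Run from the directory that holds the two files, something like `bridge_pair_ok ifcfg-eth0 ifcfg-br0 && echo "files agree"` would report whether eth0 points at the bridge that ifcfg-br0 defines.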

Initialize the bridge by restarting the network:

    service network restart
    brctl show
    bridge name     bridge id               STP enabled     interfaces
    br0             8000.000g69ae8810       yes             eth0
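If I wanted to script this check rather than read the brctl table, the kernel also exposes bridge membership under sysfs: each interface attached to a bridge shows up as an entry in that bridge's brif directory. A minimal sketch follows. The function name bridge_has_port is hypothetical, and the optional third argument (an alternate sysfs root) is there only to make the function easy to try out.

```shell
#!/bin/sh
# bridge_has_port BRIDGE PORT [SYSFS_ROOT]
# Hypothetical helper: succeeds if PORT is attached to BRIDGE, judged by
# the presence of SYSFS_ROOT/BRIDGE/brif/PORT (default root: /sys/class/net).
bridge_has_port() {
    root=${3:-/sys/class/net}
    [ -e "$root/$1/brif/$2" ]
}
```

On my host this would be called as `bridge_has_port br0 eth0 && echo "eth0 is attached to br0"`.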

Now on to installing LXC:

    yum install epel-release
    yum install debootstrap perl libvirt
    yum install lxc lxc-templates
    yum install lxc-extra-1.0.11-2.el7.x86_64

Once installed, I start and enable the services for startup:

    systemctl start lxc.service
    systemctl start libvirtd
    systemctl enable lxc.service
    systemctl enable libvirtd

Give the configuration a quick check with lxc-checkconfig:

    Kernel configuration not found at /proc/config.gz; searching...
    Kernel configuration found at /boot/config-3.10.0-1127.8.2.el7.x86_64
    --- Namespaces ---
    Namespaces: enabled
    Utsname namespace: enabled
    Ipc namespace: enabled
    Pid namespace: enabled
    User namespace: enabled
    Warning: newuidmap is not setuid-root
    Warning: newgidmap is not setuid-root
    Network namespace: enabled
    Multiple /dev/pts instances: enabled

    --- Control groups ---
    Cgroup: enabled
    Cgroup clone_children flag: enabled
    Cgroup device: enabled
    Cgroup sched: enabled
    Cgroup cpu account: enabled
    Cgroup memory controller: enabled
    Cgroup cpuset: enabled

    --- Misc ---
    Veth pair device: enabled
    Macvlan: enabled
    Vlan: enabled
    Bridges: enabled
    Advanced netfilter: enabled
    CONFIG_NF_NAT_IPV4: enabled
    CONFIG_NF_NAT_IPV6: enabled
    CONFIG_IP_NF_TARGET_MASQUERADE: enabled
    CONFIG_IP6_NF_TARGET_MASQUERADE: enabled
    CONFIG_NETFILTER_XT_TARGET_CHECKSUM: enabled

    --- Checkpoint/Restore ---
    checkpoint restore: enabled
    CONFIG_FHANDLE: enabled
    CONFIG_EVENTFD: enabled
    CONFIG_EPOLL: enabled
    CONFIG_UNIX_DIAG: enabled
    CONFIG_INET_DIAG: enabled
    CONFIG_PACKET_DIAG: enabled
    CONFIG_NETLINK_DIAG: enabled
    File capabilities: enabled
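That is a lot of output to scan by eye, so a small filter that keeps only the warnings and anything not reported as enabled can help. This is a rough sketch rather than anything official: the function name checkconfig_problems is hypothetical, and it assumes each check is printed as "name: status" on one line, as in the output above. It also strips ANSI colour codes first, on the assumption that the script may emit them even when piped.

```shell
#!/bin/sh
# checkconfig_problems: read lxc-checkconfig output on stdin and print
# the warning lines plus every "name: status" line whose status is not
# "enabled". Hypothetical helper; prints nothing when all checks pass.
checkconfig_problems() {
    esc=$(printf '\033')
    # Remove colour escape sequences, then filter.
    sed "s/${esc}\[[0-9;]*m//g" | awk '
        /^Warning:/ { print; next }
        /[^ ]: [^ ]+$/ && $NF != "enabled" { print }
    '
}
```

It would be used as `lxc-checkconfig | checkconfig_problems`. For the output shown above, only the two newuidmap/newgidmap warnings would remain.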

Looking good! Now I can configure LXC to use the newly created Host Bridge. Edit /etc/lxc/default.conf and change lxc.network.link to br0.

    lxc.network.type = veth
    lxc.network.link = br0
    lxc.network.flags = up
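I made this change by hand, but if I were setting up several hosts the same edit could be scripted. Below is a minimal sketch under a few assumptions: the function name set_lxc_link is hypothetical, the key is spelled lxc.network.link exactly as in the file above, and the bridge name contains no characters that are special to sed (such as / or &).

```shell
#!/bin/sh
# set_lxc_link FILE BRIDGE
# Hypothetical helper: point lxc.network.link in FILE at BRIDGE.
# Rewrites the existing line if present, otherwise appends a new one.
set_lxc_link() {
    file=$1
    bridge=$2
    if grep -q '^lxc\.network\.link[[:space:]]*=' "$file"; then
        # Write to a temp file, then copy back so the original file's
        # ownership and permissions are left untouched.
        sed "s/^\(lxc\.network\.link[[:space:]]*=[[:space:]]*\).*/\1$bridge/" "$file" > "$file.tmp" &&
            cat "$file.tmp" > "$file" &&
            rm -f "$file.tmp"
    else
        printf 'lxc.network.link = %s\n' "$bridge" >> "$file"
    fi
}
```

For this lab the call would be `set_lxc_link /etc/lxc/default.conf br0`, ideally after taking a backup copy of the file.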

Now we can move on to creating our first container!

    lxc-create -n centos01 -t centos

Start the container:

    lxc-start -n centos01

And check the status:

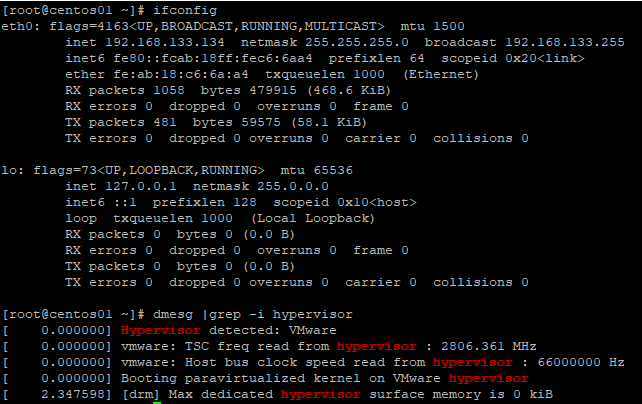

    [root@lnx01 ~]# lxc-info -n centos01
    Name:           centos01
    State:          RUNNING
    PID:            1772
    IP:             192.168.133.134
    CPU use:        0.13 seconds
    BlkIO use:      0 bytes
    Memory use:     1.04 MiB
    KMem use:       0 bytes
    Link:           vethN4UYM8
     TX bytes:      1.39 KiB
     RX bytes:      1.87 KiB
     Total bytes:   3.26 KiB
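For follow-up scripts (say, to SSH into the container once it has an address), it can be handy to pull a single field out of this output. A small sketch, assuming the "Key: value" layout shown above. The function name lxc_info_field is hypothetical.

```shell
#!/bin/sh
# lxc_info_field KEY
# Hypothetical helper: read lxc-info output on stdin and print the value
# of the first line that starts with "KEY:".
lxc_info_field() {
    awk -v key="$1" '
        index($0, key ":") == 1 {
            sub(/^[^:]*:[ \t]*/, "")   # drop the key and its padding
            print
            exit
        }'
}
```

With the container above, `lxc-info -n centos01 | lxc_info_field IP` would print the container's address, and `lxc_info_field State` would print its state.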

And there we have it! LXC is up, and our test CentOS container is running on our host system.